lcurves

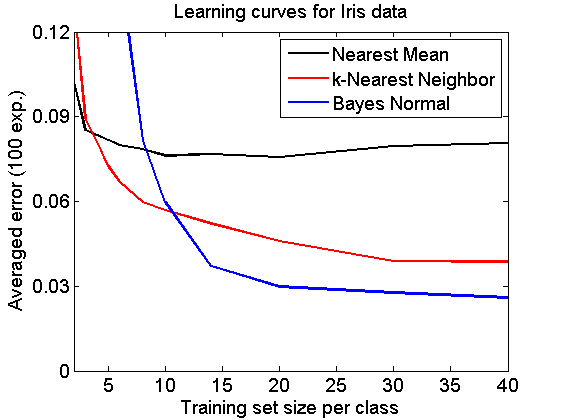

Learning curves for Bayes-Normal, Nearest Mean and Nearest Neighbour on the Iris dataset. Averages over 100 repetitions.

PRTools and PRDataFiles should be on the MATLAB path.

Download the m-file from here. See http://37steps.com/prtools for more.

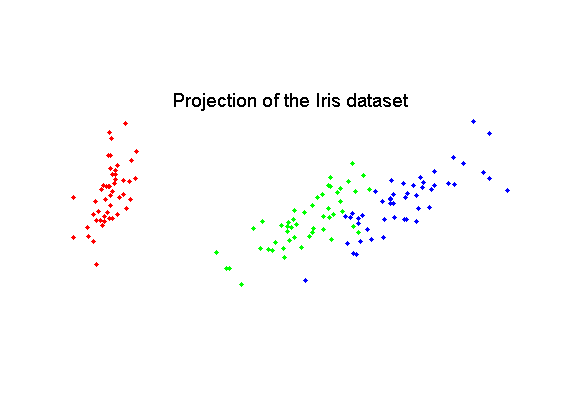

Show datasets and best classifier

delfigs

randreset(1)

a = iris;

a = setprior(a,0);

scattern(a*pcam(a,2));

title('Projection of the Iris dataset')

fontsize(14);

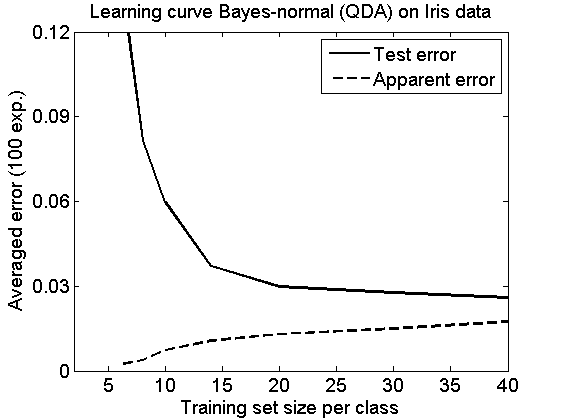

Show learning curve of qdc with apparent error

figure;

prwarning off;

e = cleval(a,qdc,[6 8 10 14 20 30 40],100);

plote(e,'nolegend')

legend('Test error','Apparent error')

title('Learning curve Bayes-normal (QDA) on Iris data')

ylabel('Averaged error (100 exp.)');

fontsize(14);

axis([2.0000 40.0000 0 0.1200])

As the training set grows, the test error and the apparent error converge toward each other, approaching the error of the best possible QDA model.
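To make the two curves concrete, the following is a minimal sketch of a single repetition at one training-set size. It assumes that cleval does something comparable internally (drawing a training subset per class and testing on the remaining objects) and then averages over the repetitions. The size n = 10 and the variable names t, s, w, e_app and e_test are illustrative choices, not taken from the script above.

```matlab
% Sketch: one repetition of the kind of experiment that cleval averages,
% at a single training-set size. Requires PRTools and PRDataFiles.
a = iris;                   % Iris data: 3 classes, 50 objects each, 4 features
a = setprior(a,0);          % same prior setting as in the script above
n = 10;                     % training objects per class (illustrative choice)

[t,s] = gendat(a,[n n n]);  % t: n objects per class, s: the remaining objects
w = t*qdc;                  % train Bayes-normal (QDA) on the training subset

e_app  = t*w*testc;         % apparent error: measured on the training objects
e_test = s*w*testc;         % test error: measured on the held-out objects

fprintf('n = %d per class: apparent %.3f, test %.3f\n', n, e_app, e_test);
```

For a single draw the two numbers are noisy. Averaging many such draws per size, as the 100 repetitions above do, is what produces smooth curves.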

Show learning curves of nmc and k-nn

e2 = cleval(a,nmc,[2 3 4 5 6 8 10 14 20 30 40],100);

e3 = cleval(a,knnc,[2 3 4 5 6 8 10 14 20 30 40],100);

figure;

plote({e2,e3,e},'nolegend','noapperror')

title('Learning curves for Iris data')

ylabel('Averaged error (100 exp.)');

legend('Nearest Mean','k-Nearest Neighbor','Bayes Normal')

fontsize(14);

axis([2.0000 40.0000 0 0.1200])

For small training sets, simpler classifiers perform better. More complex classifiers (with more parameters to estimate) are expected to perform better for larger training sets. The k-NN classifier improves slowly, but is expected to beat Bayes Normal at some point, as it asymptotically approximates the Bayes classifier.
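The difference in complexity can be made explicit by counting the estimated parameters. The sketch below uses plain MATLAB and assumes the textbook forms of the two models: Nearest Mean estimates one mean vector per class, while Bayes-normal (QDA) estimates a mean vector and a full covariance matrix per class. Class priors and any regularisation settings are ignored, and the variable names are illustrative.

```matlab
% Sketch: number of parameters each classifier estimates on Iris.
c = 3;                        % classes in the Iris dataset
d = 4;                        % features per object

p_mean = d;                   % one mean vector per class
p_cov  = d*(d+1)/2;           % free entries of a symmetric d-by-d covariance matrix

p_nmc = c*p_mean;             % Nearest Mean: class means only
p_qdc = c*(p_mean + p_cov);   % Bayes-normal (QDA): mean and covariance per class

fprintf('Nearest Mean: %d parameters, Bayes-normal: %d parameters\n', p_nmc, p_qdc);
% prints: Nearest Mean: 12 parameters, Bayes-normal: 42 parameters
```

With 6 training objects per class, QDA has to fit 14 parameters per class from only 24 measured values, which is consistent with its weaker performance at the small end of the curve.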

Surprisingly, the learning curve for the Nearest Mean classifier shows a minimum, a phenomenon discussed by Marco Loog et al.